Why Does Backpropagation Work? A Journey Through Mirrors, Messages, and Mistakes

"A Mirror that Teaches You"

What if mistakes could talk?

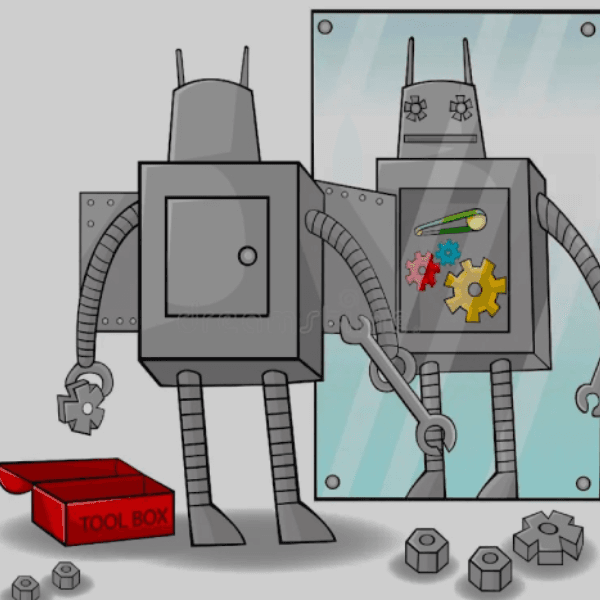

Imagine you're a robot — not the flashy kind with lasers and one-liners, but the kind built to learn. You try to do something: say, lift a cup. But you miss. Slightly off. Not terrible, just… not right.

Now picture this: You look in the mirror, and your reflection shows you exactly what went wrong.

🔧 One gear turned too early.

⚙️ Another one too slow.

💡 A tiny lever flicked when it shouldn't have.

And instead of just shrugging it off, your reflection quietly points to each of those errors — and hands you the precise tool to tweak them.

That's the spirit of backpropagation.

It's not punishment. It's not guesswork.

It's feedback — personalized, surgical, silent.

A mirror that doesn't just show you your flaws… but teaches you how to fix them.

The Message Chain Analogy

Remember playing the game "Telephone" as a kid?

You start with a message — maybe something like "Bananas are cool" — and whisper it to the person next to you. They whisper it to the next, and so on, until it reaches the final person.

Except… when it does, the message is now "Pajamas on a mule."

What happened?

Somewhere along the line, things got muddled. Misheard. Mispassed.

That's the forward pass in a neural network:

The input flows through each neuron — layer by layer — transforming a little at each stop.

But by the time it reaches the output, maybe it's not quite what we hoped.

So what do we do?

We reverse the chain — not to resend the message, but to trace the distortion.

We go backward, asking each neuron:

"Hey, how much of that final mess was your fault?"

"If you had nudged just a little differently, would the message have been clearer?"

Each neuron doesn't get yelled at.

It just gets a gentle nudge — a whispered correction.

A tweak in behavior for next time.

That's the backward pass — the magic of backpropagation.

Not just passing messages forward, but learning from how they get lost along the way.
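To make the forward half of the "Telephone" game concrete, here is a minimal sketch. It assumes a toy chain of one-neuron layers with a sigmoid at each stop; the names `forward`, `trace`, and the example weights are made up for illustration and are not from any particular library.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, weights):
    """Pass x through a chain of one-neuron layers, recording every stop."""
    trace = [x]            # the message as heard at each stop
    a = x
    for w in weights:
        z = w * a          # the neuron's internal calculation
        a = sigmoid(z)     # the transformed message it passes on
        trace.append(a)
    return a, trace

# Hypothetical input and weights, chosen only for the demo.
output, trace = forward(0.5, [0.8, -1.2, 2.0])
```

Keeping `trace` around is the point: the backward pass needs to know what each neuron "heard" in order to work out how much of the final distortion it caused.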

Intuition — Local Corrections with Global Impact

Imagine you're part of a football team. You just lost a match. Ouch.

Now, the coach brings everyone into the locker room and plays the match footage. He pauses the video at critical moments and says things like,

"See here? The left flank was wide open."

"Our midfield didn't press early enough."

You're not being singled out, but as a player, you naturally ask:

"Was that gap because of me?"

"Could I have stepped up a second earlier?"

Each teammate starts thinking about how their individual movement may have influenced the team's outcome.

And in the next match? Everyone slightly adjusts. Not dramatically. Just enough. A tighter pass here, a quicker move there.

Now swap out "players" with "neurons" in a deep learning model.

After a bad prediction, each neuron doesn't panic — it simply whispers to itself:

"Was it me?"

And if the math says "maybe just a little," it shifts its internal weight a tiny bit.

These microscopic nudges ripple throughout the entire network, eventually correcting the overall behavior.

Just like a team gradually learns to play better — not because one person became a superstar, but because everyone nudged their instincts based on the game's outcome.

The Math — Gradient Descent and Chain Rule

So far, we've been talking about backpropagation like it's a coaching system, a mirror, or a replay room. But underneath all those analogies lives some very elegant math that tells each weight in the network, "Hey, move this much in this direction."

Let’s crack that open.

Imagine you're standing on a hill with fog all around — you can't see the bottom, but you can feel the slope under your feet. Naturally, you'd start walking downhill, following the steepest path. That's gradient descent.

The gradient tells you the direction of steepest increase.

To minimize error, we take the negative gradient — the path of steepest descent.
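Here is what walking downhill in the fog can look like in code. This is a sketch under simple assumptions: a single weight, a made-up bowl-shaped loss `(w - 3)^2` whose lowest point sits at `w = 3`, and a hand-picked `learning_rate`.

```python
def loss(w):
    """A toy loss: a bowl with its lowest point at w = 3."""
    return (w - 3.0) ** 2

def grad(w):
    """Slope of the toy loss at w (direction of steepest increase)."""
    return 2.0 * (w - 3.0)

w = 0.0                # start somewhere on the hill
learning_rate = 0.1    # how big each step is (an assumed value)

for step in range(100):
    # Step against the gradient: the direction of steepest descent.
    w -= learning_rate * grad(w)
```

After the loop, `w` sits very close to 3. Real networks do the same thing, only with millions of weights and a loss surface that is far bumpier than this bowl.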

Every weight in a neural network is like a dial or knob on a giant soundboard. You're trying to tune the output to sound just right, but you can't just turn knobs randomly. The gradient tells you how each knob affects the volume of the wrongness (the loss): which way to turn it, and how strongly the loss will respond. Turn each one a little in the direction that lowers the loss, and the output gets a bit better.

Here's where the chain rule steps in. The chain rule is like tracing blame backward through a group project:

"The final report was bad. Was it the conclusion? The analysis? The data collection?"

Each layer contributes to the final output, and the chain rule helps us pass the feedback (the blame) backward, one step at a time, calculating how much each layer is responsible.

Put simply:

- Loss = How bad the output was

- Gradient = Who should take how much blame

- Chain Rule = The messenger that delivers the blame all the way down the line

- Gradient Descent = Everyone tuning their dials a bit to fix the final outcome

The math of backprop might look scary in symbols, but it's just a beautifully choreographed team of tiny knobs adjusting themselves quietly, one by one, all so the next output sounds a bit less off-key.
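The four ideas in that list can be sketched together on the smallest network that still has "layers": two one-weight neurons in a row. Everything here is an illustrative assumption, including the sigmoid activation, the squared-error loss, and the names `forward` and `backward`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, y, w1, w2):
    a1 = sigmoid(w1 * x)          # first layer's activation
    a2 = sigmoid(w2 * a1)         # second layer's activation (the output)
    loss = 0.5 * (a2 - y) ** 2    # how bad the output was
    return a1, a2, loss

def backward(x, y, w1, w2):
    a1, a2, _ = forward(x, y, w1, w2)
    # Start at the output and walk the blame backward, one step at a time.
    dL_da2 = a2 - y
    dL_dz2 = dL_da2 * a2 * (1.0 - a2)
    dL_dw2 = dL_dz2 * a1          # blame for the second layer's weight
    dL_da1 = dL_dz2 * w2          # blame handed back to the first layer
    dL_dz1 = dL_da1 * a1 * (1.0 - a1)
    dL_dw1 = dL_dz1 * x           # blame for the first layer's weight
    return dL_dw1, dL_dw2

# Hypothetical input, target, and starting weights.
x, y, w1, w2 = 1.0, 0.0, 0.5, -0.3
g1, g2 = backward(x, y, w1, w2)

# One gradient descent step: every dial turns a little against its blame.
lr = 0.1
new_w1, new_w2 = w1 - lr * g1, w2 - lr * g2
```

Notice that the first layer never looks at the loss directly. It only receives `dL_da1`, the message handed back by the layer after it.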

The Core Equation Unveiled

Okay, time to pop the hood and look at the actual engine behind backpropagation.

Here’s the equation that powers it all:

∂L/∂w = ∂L/∂a · ∂a/∂z · ∂z/∂w

If that looks intimidating, don’t worry — we’re not here to "solve" it, but to understand what it’s really saying.

Think of it like a blame game.

You’re trying to figure out:

"How much did this specific weight (w) contribute to the final mistake (L)?"

But the weight didn’t act alone.

First, the weight influenced the neuron's internal calculation (z).

Then, that internal value passed through an activation (a).

Then, the activation affected the output, which caused a loss.

So, to fairly assign responsibility, we chain the effects together, like passing blame down a line:

- ∂L/∂a: How much the final loss changes with respect to the activation

- ∂a/∂z: How much the activation changes with respect to the internal value

- ∂z/∂w: How much that internal value changes with respect to the actual weight

Multiply them together, and you get how much the weight ultimately influenced the loss.
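To see those three factors as actual numbers, here is a small sketch for a single neuron. It assumes `z = w * x`, a sigmoid activation, and a squared-error loss `L = 0.5 * (a - y)^2`; those choices, and the example values, are mine for illustration. The last lines compare the chain-rule answer against a brute-force estimate obtained by wiggling the weight slightly.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, x, y):
    return 0.5 * (sigmoid(w * x) - y) ** 2

def weight_gradient(w, x, y):
    z = w * x
    a = sigmoid(z)
    dL_da = a - y            # how the loss changes with the activation
    da_dz = a * (1.0 - a)    # how the activation changes with z (sigmoid slope)
    dz_dw = x                # how z changes with the weight
    return dL_da * da_dz * dz_dw

# Hypothetical weight, input, and target.
w, x, y = 0.7, 1.5, 1.0
analytic = weight_gradient(w, x, y)

# Sanity check: nudge the weight both ways and measure the loss directly.
eps = 1e-6
numeric = (loss(w + eps, x, y) - loss(w - eps, x, y)) / (2 * eps)
```

The two numbers agree to many decimal places, which is a common way to check a hand-written backward pass before trusting it.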

Let’s break it down with a relatable analogy.

Imagine a chef in a kitchen.

The taste of the dish (L) was bad.

That’s partly because the sauce (a) was off.

But the sauce was off because the heat (z) was too high.

And the heat was too high because the knob (w) was turned too far.

So you ask: "How much is that knob (w) responsible for ruining the dish (L)?"

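Answer: Multiply the effects along the way: how strongly the knob sets the heat, how strongly the heat changes the sauce, and how strongly the sauce changes the taste. This chain of influence is exactly what the chain rule captures.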

It’s how calculus lets us trace cause and effect across multiple layers.

TL;DR:

"Your weight influenced this activation, which influenced this output, which caused this loss. Here's your share of the blame."

That’s it. That’s backpropagation’s core.

A quiet accountant walking backward through the network, tallying up who owes what.

Wrap-up — The Silent Teacher

You never hear it speak.

No applause, no explanations, no moral lessons.

Just… correction.

Backpropagation doesn’t try to "teach" in the human sense.

It simply shows each weight:

"Here’s what went wrong, and here’s your part in it."

And that’s it.

Over time, with enough examples, these quiet corrections start to add up.

The network starts to get it.
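Here is a sketch of those quiet corrections adding up. It assumes a single sigmoid neuron with a weight `w` and a bias `b`, a tiny made-up dataset (label 1 for positive inputs, 0 for negative ones), and hand-picked settings for `lr` and the number of passes.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset (invented for the demo): (input, target) pairs.
data = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0), (2.0, 1.0)]

w, b = 0.0, 0.0    # the neuron starts out knowing nothing
lr = 0.5           # assumed learning rate
history = []       # total loss seen during each pass over the data

for epoch in range(200):
    total = 0.0
    for x, y in data:
        a = sigmoid(w * x + b)           # forward pass
        total += 0.5 * (a - y) ** 2      # how wrong was it?
        dL_dz = (a - y) * a * (1.0 - a)  # blame at the neuron's internal value
        w -= lr * dL_dz * x              # tiny correction to the weight
        b -= lr * dL_dz                  # tiny correction to the bias
    history.append(total)
```

No single update does much. But `history` shrinks steadily from the first pass to the last, which is the whole story of training in miniature.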

Like a pianist refining finger placement after each wrong note, or an athlete adjusting posture after a missed goal — no lecture needed.

It’s elegant. It’s mechanical.

And somehow… it works.

So the next time you hear "backpropagation," don’t picture math flying through wires.

Picture a mirror. A message passed through a chain.

A coach pausing the match footage.

A silent system, learning by listening to its own mistakes.

Bonus — What If You Learned This Way?

Let’s flip the roles.

Imagine if you learned like a neural network.

No explanations. No feedback forms.

Just a score at the end — and a quiet nudge telling you how to adjust one tiny thing in your thought process.

Nobody explains why the right answer was right.

You only know how wrong you were, and how to be a bit less wrong next time.

It sounds cold…

Yet that’s how every deep learning breakthrough you’ve read about was trained.

Not by understanding.

But by correction.

And maybe, just maybe — that’s worth reflecting on.

Also Published on Medium